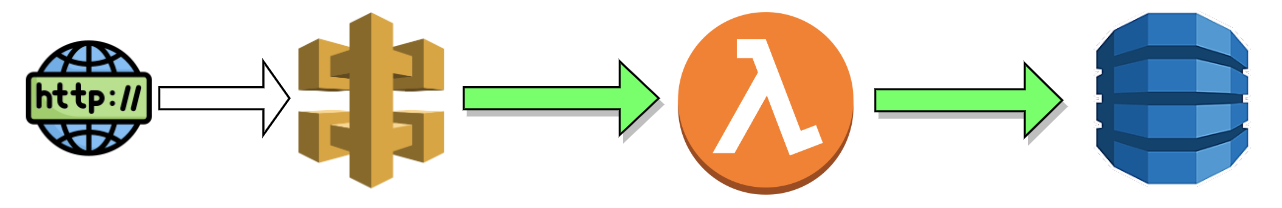

By Nobukimi Sasaki (2023-04-24). Continued from “API Gateway + Lambda + DynamoDB (Configuration)”.

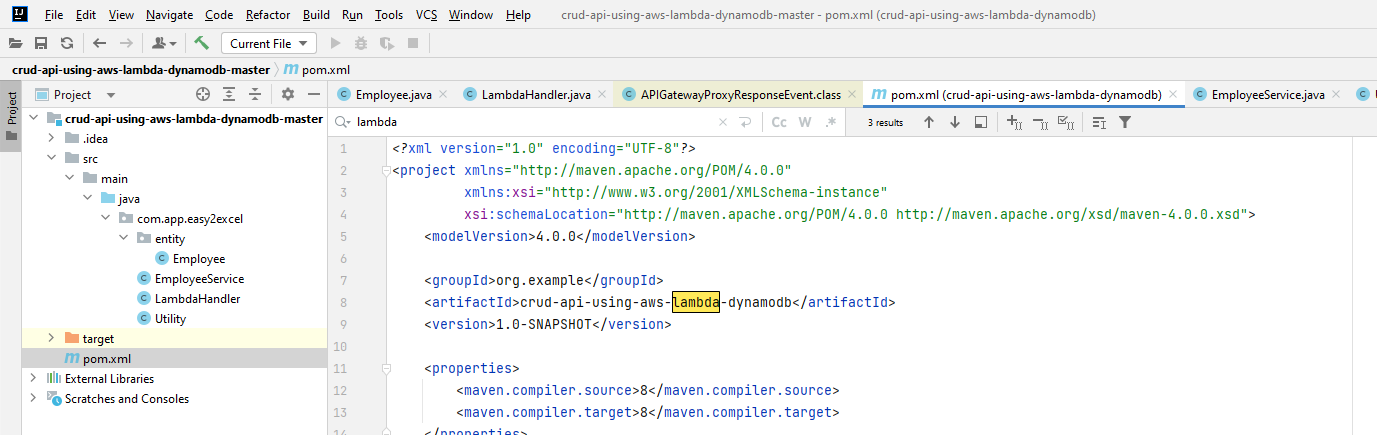

Maven dependencies for AWS Lambda:

    <dependency>
        <groupId>com.amazonaws</groupId>
        <artifactId>aws-lambda-java-core</artifactId>
        <version>1.1.0</version>
    </dependency>
    <dependency>
        <groupId>com.amazonaws</groupId>
        <artifactId>aws-lambda-java-events</artifactId>
        <version>2.0.2</version>
    </dependency>
    <dependency>
        <groupId>com.amazonaws</groupId>
        <artifactId>aws-java-sdk-dynamodb</artifactId>
        <version>1.11.271</version>
    </dependency>
    <dependency>
        <groupId>com.fasterxml.jackson.core</groupId>
        <artifactId>jackson-databind</artifactId>
        <version>2.14.1</version>
    </dependency>

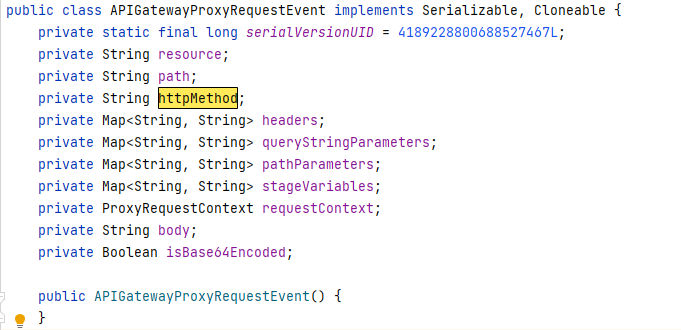

The main class receives the request and dispatches on APIGatewayProxyRequestEvent::getHttpMethod.

    import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
    import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClientBuilder;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBScanExpression;
    import com.amazonaws.services.lambda.runtime.Context;
    import com.amazonaws.services.lambda.runtime.RequestHandler;
    import com.amazonaws.services.lambda.runtime.events.APIGatewayProxyRequestEvent;
    import com.amazonaws.services.lambda.runtime.events.APIGatewayProxyResponseEvent;

    public class LambdaHandler implements RequestHandler<APIGatewayProxyRequestEvent, APIGatewayProxyResponseEvent> {

        @Override
        public APIGatewayProxyResponseEvent handleRequest ( APIGatewayProxyRequestEvent apiGatewayRequest, Context context ) {
            EmployeeService employeeService = new EmployeeService();
            // Dispatch on the HTTP method of the incoming request.
            switch (apiGatewayRequest.getHttpMethod()) {
                case "POST":
                    return employeeService.saveEmployee( apiGatewayRequest, context );
                case "GET":
                    // With path parameters: fetch one employee; without: fetch all.
                    if (apiGatewayRequest.getPathParameters() != null) {
                        return employeeService.getEmployeeById( apiGatewayRequest, context );
                    }
                    return employeeService.getEmployees( apiGatewayRequest, context );
                case "DELETE":
                    if (apiGatewayRequest.getPathParameters() != null) {
                        return employeeService.deleteEmployeeById( apiGatewayRequest, context );
                    }
                    // A DELETE without path parameters falls through and is rejected below.
                default:
                    throw new Error( "Unsupported Methods:::" + apiGatewayRequest.getHttpMethod() );
            }
        }
    }
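The dispatch rule in handleRequest can be tried out without any AWS classes. The sketch below is a dependency-free mirror of the switch, not the article's code: `RouteSketch`, the returned labels, and the `empId` path-parameter key are illustrative assumptions, and it throws `IllegalArgumentException` where the handler throws `Error`. It returns the name of the EmployeeService method the handler would call.

```java
import java.util.Map;

// Hypothetical, dependency-free mirror of the switch in LambdaHandler.
final class RouteSketch {

    // Returns the name of the EmployeeService method the handler would call.
    static String route(String httpMethod, Map<String, String> pathParameters) {
        boolean hasPathParams = pathParameters != null;
        switch (httpMethod) {
            case "POST":
                return "saveEmployee";
            case "GET":
                return hasPathParams ? "getEmployeeById" : "getEmployees";
            case "DELETE":
                if (hasPathParams) {
                    return "deleteEmployeeById";
                }
                // DELETE without an id is rejected, same as an unknown method.
                throw new IllegalArgumentException("Unsupported method: " + httpMethod);
            default:
                throw new IllegalArgumentException("Unsupported method: " + httpMethod);
        }
    }

    public static void main(String[] args) {
        System.out.println(route("GET", null));
        // "empId" is an assumed path-parameter name.
        System.out.println(route("GET", Map.of("empId", "101")));
    }
}
```

Only the presence of path parameters matters to the routing decision, not their content; the service methods read the actual id from the request.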

The first parameter, APIGatewayProxyRequestEvent, carries the incoming request. Its getHttpMethod returns the HTTP method as a String, such as “GET”, “POST”, or “PUT”:

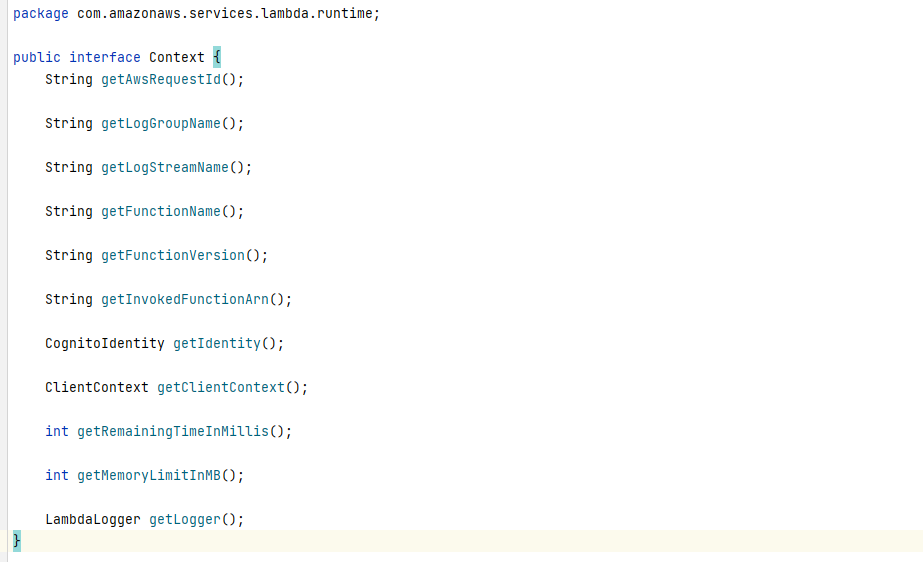

The second parameter, Context, contains:

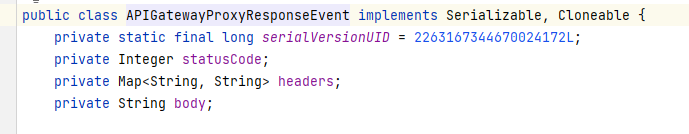

The response class “APIGatewayProxyResponseEvent” contains the status code, headers, and body of the response.

Saving data:

    import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
    import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClientBuilder;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBScanExpression;
    import com.amazonaws.services.lambda.runtime.Context;
    import com.amazonaws.services.lambda.runtime.events.APIGatewayProxyRequestEvent;
    import com.amazonaws.services.lambda.runtime.events.APIGatewayProxyResponseEvent;
    import com.app.easy2excel.entity.Employee;

    import java.util.List;
    import java.util.Map;

    public class EmployeeService {

        private DynamoDBMapper dynamoDBMapper;
        private static String jsonBody = null;

        public APIGatewayProxyResponseEvent saveEmployee ( APIGatewayProxyRequestEvent apiGatewayRequest, Context context ) {
            initDynamoDB();
            // Deserialize the JSON request body into an Employee and persist it.
            Employee employee = Utility.convertStringToObj( apiGatewayRequest.getBody(), context );
            dynamoDBMapper.save( employee );
            // Echo the saved item back to the caller as JSON with status 201.
            jsonBody = Utility.convertObjToString( employee, context );
            context.getLogger().log( "data saved successfully to dynamodb:::" + jsonBody );
            return createAPIResponse( jsonBody, 201, Utility.createHeaders() );
        }

        ....

The createAPIResponse method builds and returns the APIGatewayProxyResponseEvent:

    private APIGatewayProxyResponseEvent createAPIResponse ( String body, int statusCode, Map<String, String> headers ) {
        APIGatewayProxyResponseEvent responseEvent = new APIGatewayProxyResponseEvent();
        responseEvent.setBody( body );
        responseEvent.setHeaders( headers );
        responseEvent.setStatusCode( statusCode );
        return responseEvent;
    }
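The headers passed to createAPIResponse come from Utility.createHeaders(), whose body is not shown in this article. As a rough idea of what such a helper might return, here is a minimal stand-in; the class name `HeadersSketch` and the choice of a single Content-Type header are assumptions, not the author's implementation.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for Utility.createHeaders(); the real helper is not shown.
final class HeadersSketch {

    // Builds the response headers for a JSON body.
    static Map<String, String> createHeaders() {
        Map<String, String> headers = new HashMap<>();
        // Assumption: the API returns JSON, so only Content-Type is set here.
        headers.put("Content-Type", "application/json");
        return headers;
    }

    public static void main(String[] args) {
        System.out.println(createHeaders());
    }
}
```

A map of this shape fits the Map<String, String> parameter that createAPIResponse hands to setHeaders.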

The entity model:

    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBAttribute;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBHashKey;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBTable;

    @DynamoDBTable(tableName = "employee")
    public class Employee {

        @DynamoDBHashKey(attributeName = "empId")
        private String empId;

        @DynamoDBAttribute(attributeName = "name")
        private String name;

        @DynamoDBAttribute(attributeName = "email")
        private String email;

        ....

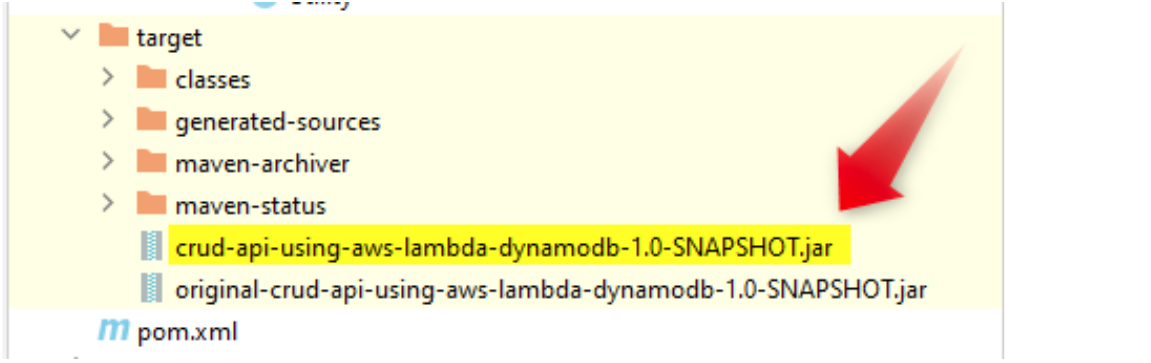

How to deploy the code:

In the IntelliJ terminal, run: mvn clean install

The jar file is created under target:

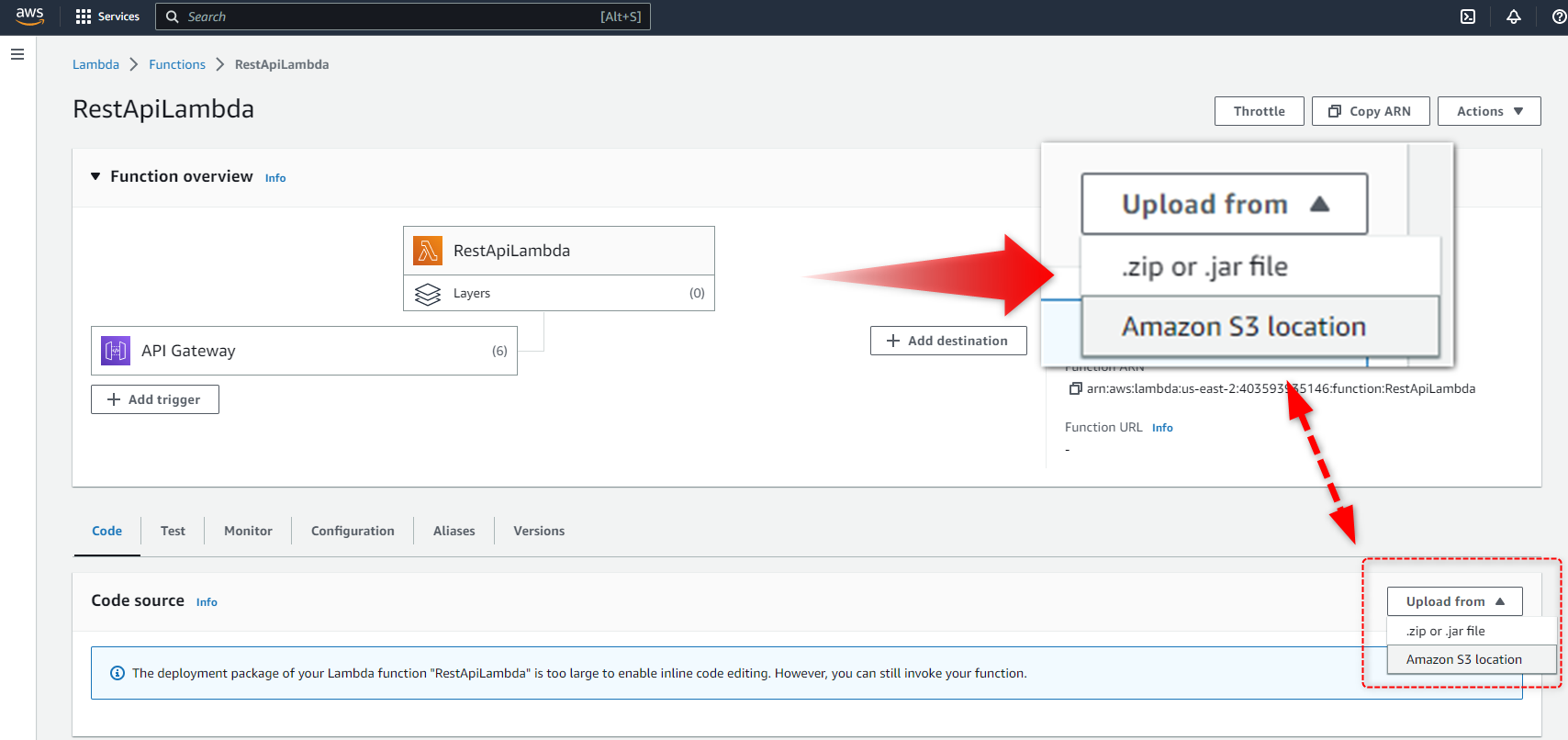

In the Lambda console, go to Lambda > Functions > RestApiLambda (your function name) and click the “Upload from” button. Select the built jar file, then upload it:

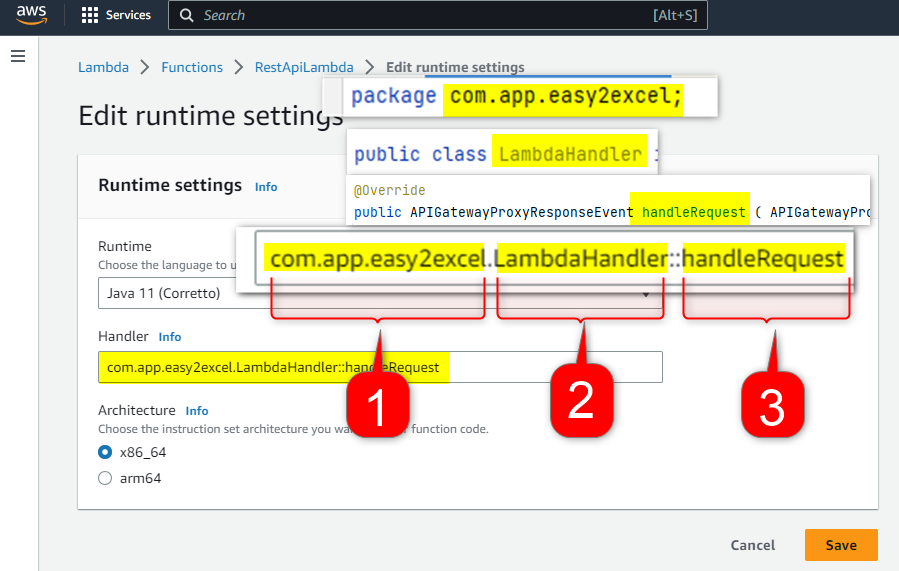

Scroll down to Runtime settings and click Edit.

Set the Handler field from these three parts:

1. Package path

2. Class name

3. Method name

Then save. The API is now ready to run.
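For a Java function, Lambda expects the three parts above joined as package.ClassName::methodName. The small sketch below just assembles that string; `HandlerStringSketch` is an illustrative helper and `com.example.app` is a placeholder package, since the package of LambdaHandler is not shown in this article.

```java
// Hypothetical helper that assembles a Lambda handler string for a Java function.
final class HandlerStringSketch {

    // Joins the parts as package.ClassName::methodName.
    static String handlerString(String packageName, String className, String methodName) {
        return packageName + "." + className + "::" + methodName;
    }

    public static void main(String[] args) {
        // "com.example.app" is a placeholder; use the package of your own handler class.
        System.out.println(handlerString("com.example.app", "LambdaHandler", "handleRequest"));
    }
}
```

With the placeholder package, the value typed into the Handler field would be com.example.app.LambdaHandler::handleRequest.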

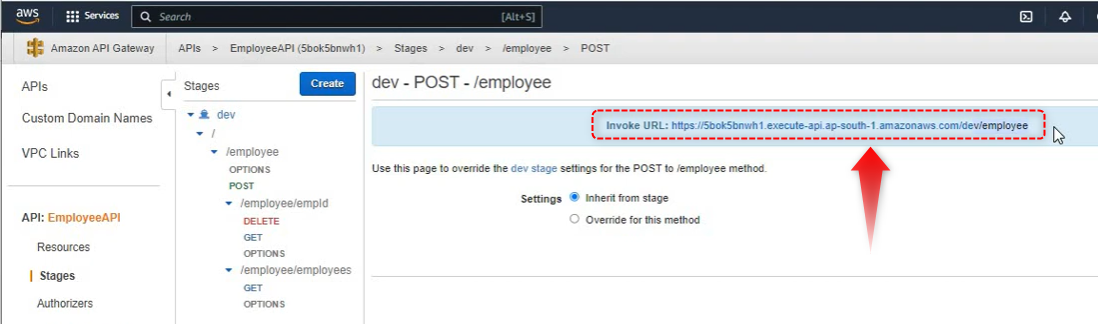

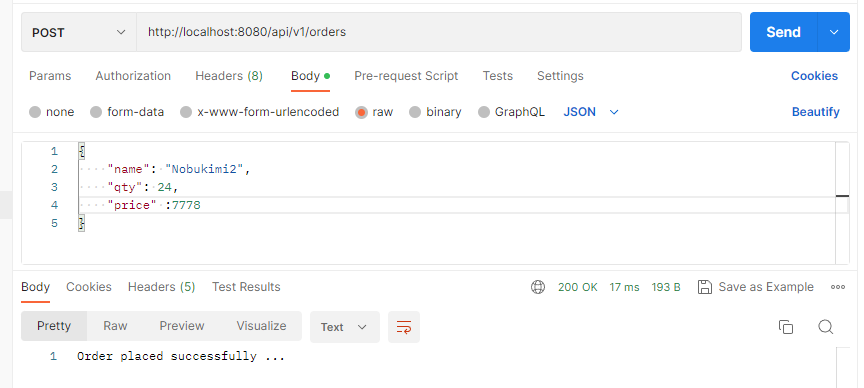

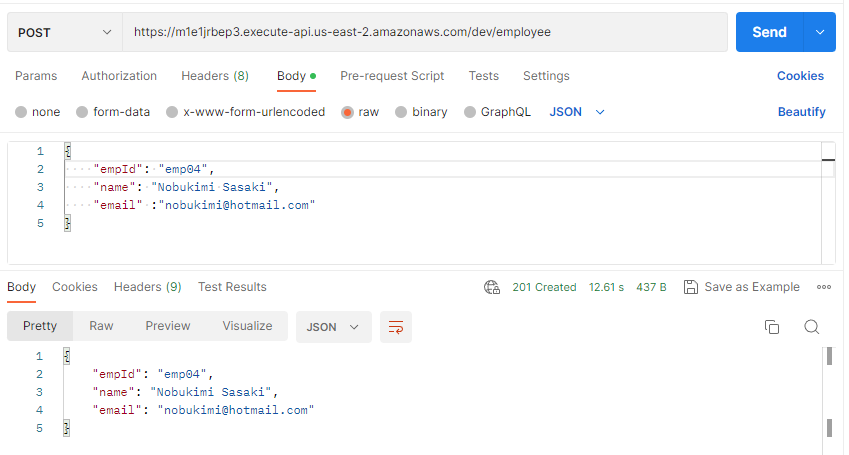

In Postman, the POST request succeeded:

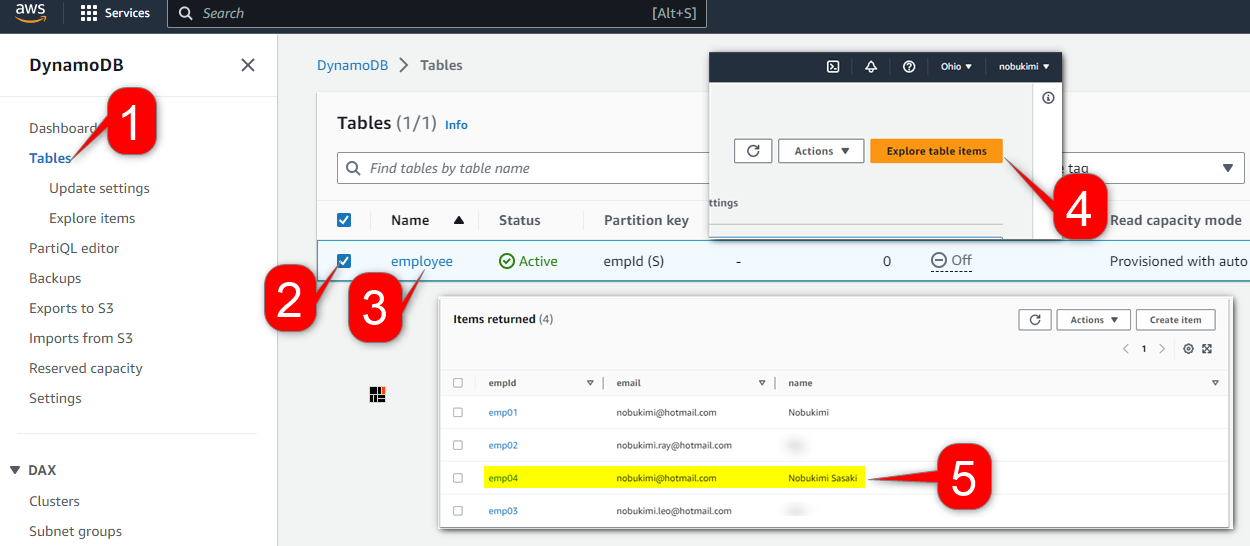
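The same POST request can also be built in code instead of Postman. The sketch below uses the JDK's java.net.http API (Java 11+) and only constructs the request; the endpoint URL, the /employee resource path, and the sample values are placeholders, and `PostRequestSketch` is an illustrative name. The JSON fields follow the Employee entity (empId, name, email).

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical client-side sketch: builds the POST request that Postman sends.
final class PostRequestSketch {

    // Placeholder: substitute the invoke URL of your own API Gateway stage.
    static final String ENDPOINT =
            "https://example.execute-api.us-east-1.amazonaws.com/dev/employee";

    // Builds a JSON POST request for one employee.
    // Note: values are inserted without JSON escaping, which is fine only for simple demo input.
    static HttpRequest buildSaveRequest(String empId, String name, String email) {
        String json = String.format(
                "{\"empId\":\"%s\",\"name\":\"%s\",\"email\":\"%s\"}", empId, name, email);
        return HttpRequest.newBuilder(URI.create(ENDPOINT))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = buildSaveRequest("101", "Alice", "alice@example.com");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

To actually send it, pass the request to HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()); given the saveEmployee code above, a successful call should come back with status 201 and the saved employee as the body.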

In the DynamoDB dashboard, go to Tables:

1. Tables

2. Check

3. Click the table name

4. Explore the items

5. You will see the data created